The AI landscape is evolving rapidly beyond LLMs and chatbots. Building on isolated AI models, developers are exploring the next step in AI development: coordination and communication between different AI agents, which provides more advanced automation capabilities for businesses.

2025 brings new technology that enables connectivity between AI agents. In the last few months, you may have heard a series of new three-letter acronyms in the AI development field. At the forefront of this development are protocols like MCP, A2A, and ACP.

Model Context Protocol (MCP), Agent-to-Agent Protocol (A2A), and Agent Communication Protocol (ACP) provide a path forward for multi-agent systems. Each of these protocols strategically targets different layers of multi-agent communication. They aren’t meant to exclude each other, but rather have different purposes and may augment one another, depending on organizational or business requirements.

While protocols like MCP, A2A, and ACP are the next step in AI evolution, they aren’t quite ready for “prime time” in sensitive enterprise uses for a variety of reasons, including security hurdles. This is not a vendor-specific problem, but an intrinsic issue related to the maturity of the underlying standards.

It’s also important to understand that none of these protocols is a magical band-aid for immature digital systems with pre-existing problems. Implementing an advanced AI solution into an unstable and immature API landscape will bring more havoc than benefit.

The effectiveness and stability of systems built upon advanced AI protocols will be heavily reliant on a strong foundation of mature API practices.

Understanding core AI protocols

Model Context Protocol (MCP) enables AI models to integrate with outside tools, APIs, and data sources, allowing companies to connect LLMs to their internal services. It gives AI agents the capability to request information or execute specific actions. Of the three protocols, it is the most mature in the current agentic AI landscape, and it is gaining traction in both market adoption and developer engagement.
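To make "request information or execute specific actions" more concrete: MCP messages are JSON-RPC 2.0 objects, and a client asks a server to run a tool with the `tools/call` method, passing the tool `name` and its `arguments`. The minimal sketch below only builds the request and reads the result; the tool `get_invoice_status` and its arguments are hypothetical, and the transport (stdio or HTTP) and session initialization are deliberately left out.

```python
import json
from itertools import count

# Monotonic request IDs; JSON-RPC 2.0 uses "id" to pair responses with requests.
_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def read_tool_result(raw_response: str) -> list:
    """Return the content items of a tool result, raising on either kind of failure."""
    response = json.loads(raw_response)
    if "error" in response:
        # Protocol-level failure, e.g. an unknown method.
        raise RuntimeError(f"JSON-RPC error: {response['error']}")
    result = response["result"]
    if result.get("isError"):
        # The tool ran but reported a failure inside its own result.
        raise RuntimeError(f"tool reported an error: {result.get('content')}")
    return result["content"]


# Hypothetical tool exposed by an internal service.
print(make_tool_call("get_invoice_status", {"invoice_id": "INV-1042"}))
```

Note that the agent, not a developer, decides which tool to call and with which arguments, which is why the security concerns below apply.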

Yet, MCP still faces unresolved security challenges — one reason we joke it stands for “More Control, Please.” Authorization vulnerabilities create potential entry points for attackers, prompt injection threatens system reliability, and data leaks risk exposing sensitive information to unauthorized users.

Agent-to-Agent Protocol (A2A) enables direct communication and collaboration between different AI systems. Primarily oriented toward internal use, it allows teams to set up sophisticated cross-platform collaboration between AI agents within an enterprise environment.

A2A benefits from substantial industry support, but so far, the protocol remains in the early stages of development.
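A2A agents advertise themselves through an Agent Card, a JSON document describing the agent, the endpoint where it accepts requests, and the skills it offers; other agents read these cards to decide whom to delegate work to. The sketch below assumes a simplified card with only `name`, `url`, and `skills[].id` (real cards carry more fields, and the specification is still changing), and the agents and URLs are made up.

```python
from dataclasses import dataclass


@dataclass
class AgentCard:
    """Simplified subset of the fields an A2A Agent Card advertises."""
    name: str
    url: str              # endpoint that accepts A2A requests
    skill_ids: list[str]


def parse_card(card: dict) -> AgentCard:
    """Reduce a raw Agent Card dict to the fields this sketch needs."""
    return AgentCard(
        name=card["name"],
        url=card["url"],
        skill_ids=[skill["id"] for skill in card.get("skills", [])],
    )


def agents_with_skill(cards: list[dict], skill_id: str) -> list[AgentCard]:
    """Return the agents whose card advertises the requested skill."""
    parsed = [parse_card(c) for c in cards]
    return [agent for agent in parsed if skill_id in agent.skill_ids]


# Hypothetical cards, as if already fetched from each agent's host.
cards = [
    {"name": "billing-agent", "url": "https://billing.example.internal/a2a",
     "skills": [{"id": "refund", "name": "Issue refund"}]},
    {"name": "crm-agent", "url": "https://crm.example.internal/a2a",
     "skills": [{"id": "lookup_customer", "name": "Find customer"}]},
]
print([agent.name for agent in agents_with_skill(cards, "refund")])
```

Because discovery relies on self-description, a card is a claim, not a proof; in an enterprise setting, which cards are trusted still has to be governed.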

Agent Communication Protocol (ACP) brings a broader approach to AI communication. It targets decentralized and edge environments, and helps agents communicate across different systems, devices, and locations.

Of the three, ACP is the newest and most complex standard. It tackles more challenging scenarios, and its specification is still in early development. Organizations are only beginning to explore how to deploy ACP safely, particularly in distributed AI systems that handle sensitive information.

| Feature | MCP | A2A | ACP |
|---|---|---|---|
| Intended Use | LLMs with external data / tool needs | Multi-agent workflows across platforms | Edge AI, embedded systems, offline agents |
| Architecture | Client-server | Client-server with Agent Cards | Decentralized |
| Discovery Mechanism | Server registry with tools | Agent Card | Broadcast / runtime registration |
| Transport Protocol | JSON-RPC 2.0 over stdio or HTTP(S) | JSON-RPC 2.0 over HTTPS | IPC, ZeroMQ, gRPC |
| Security Model | App-layer auth, OAuth 2, scoped APIs | OAuth 2, scoped endpoints | Sandboxing, private network |
| Example Use Case | Connecting an LLM to internal applications | Distributed, collaborating agents | On-device coordination of multiple agents |
| Adoption Readiness | Early adopter | Early adopter | Proof of concept |

Prime time isn’t here yet

While these protocols are developing quickly, they’re not yet ready for widespread enterprise integration, especially where sensitive data is involved. At the current stages of development, these protocols offer huge potential, but also make systems vulnerable to new, complex risks.

AI’s strength is also its weakness. The ability of agents to act, interpret, and communicate autonomously opens a Pandora’s box of potential errors that organizations must carefully navigate. Underdeveloped AI systems are susceptible to data poisoning attacks, the corruption of the information an AI learns from, which can alter how agents behave. Simple requests may turn into security breaches.

“We are very much in the hype curve phase and, although this feels different from some of the technology waves of the past – it’s still important to have a balanced set of expectations and act strategically.” – Brian Otten, VP Digital Transformation

Organizations exploring multi-agent deployment must understand that while these protocols have revolutionary potential for business transformation, they require a solid foundation and an acceptance of higher risk. Integrating them requires a mature API infrastructure, strict internal controls, and specialized security knowledge.

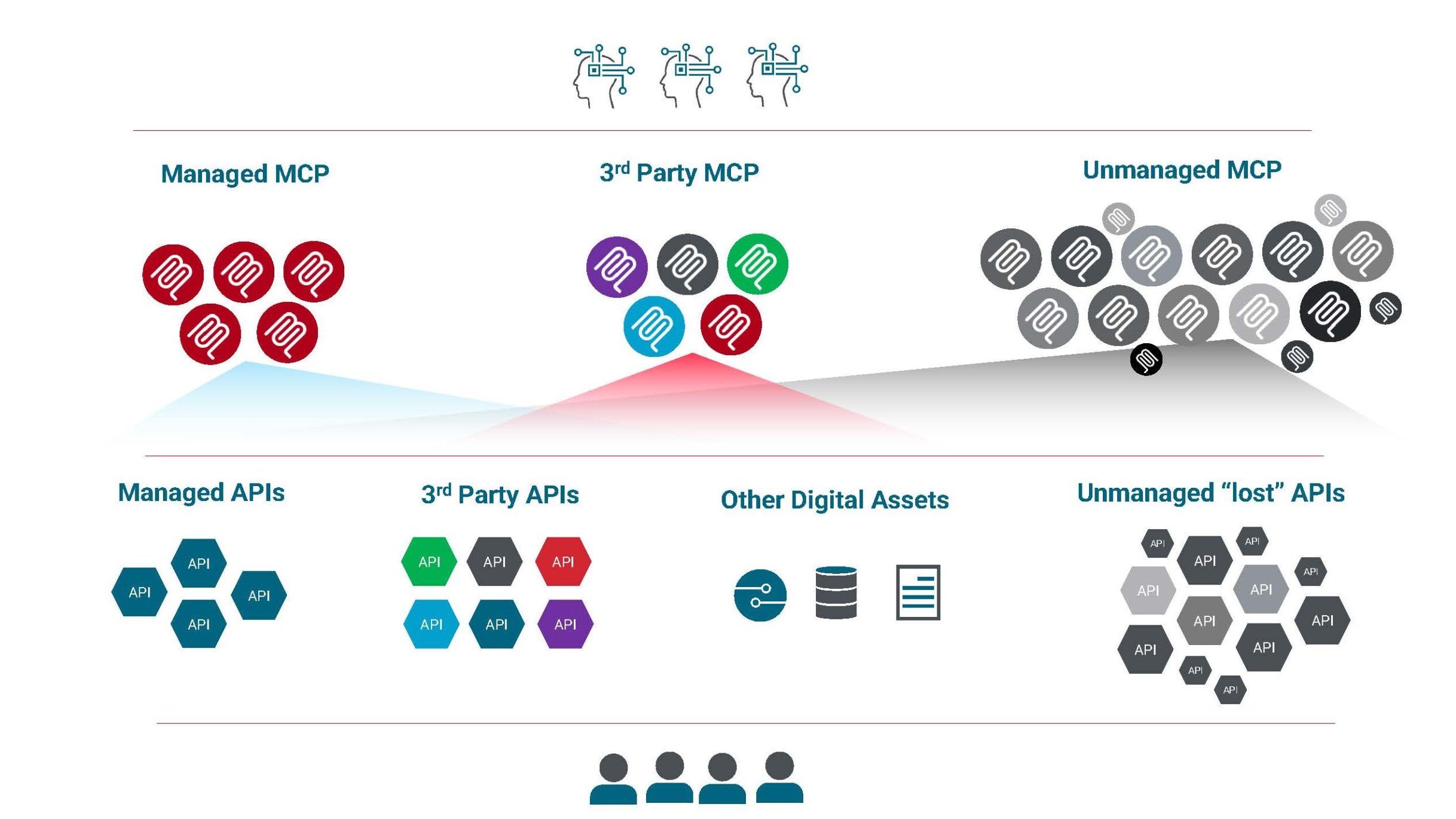

Zooming in on MCP as an example: MCP promises to wrap existing APIs and other digital assets and expose their functionality in a standardized way, bringing some order to the chaos and normalizing access for LLMs. But it doesn’t solve the chaos itself.

If anything, it adds to it, with more digital assets, from different parties, layered on top of your API landscape – hopefully managed.
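As an illustration of what "wrapping" means in practice, the sketch below defines one MCP tool (the spec's `name`, `description`, and JSON Schema `inputSchema` fields) and maps calls to it onto an existing REST endpoint. The tool, the base URL, and the `/invoices/{id}` path are all hypothetical; the point is that the wrapper only standardizes access, while the result still depends entirely on the quality of the API behind it.

```python
from urllib.parse import quote

# MCP tool definition: name, human-readable description, and a JSON Schema
# describing the arguments. The endpoint it wraps (below) is hypothetical.
INVOICE_TOOL = {
    "name": "get_invoice_status",
    "description": "Look up the payment status of an invoice by its ID.",
    "inputSchema": {
        "type": "object",
        "properties": {"invoice_id": {"type": "string"}},
        "required": ["invoice_id"],
    },
}

API_BASE = "https://api.example.internal/v1"  # hypothetical existing API


def to_api_request(tool_name: str, arguments: dict) -> tuple[str, str]:
    """Translate a tool call into the (method, URL) of the existing API it wraps."""
    if tool_name != INVOICE_TOOL["name"]:
        raise ValueError(f"unknown tool: {tool_name}")
    for field in INVOICE_TOOL["inputSchema"]["required"]:
        if field not in arguments:
            raise ValueError(f"missing argument: {field}")
    # Percent-encode the ID so model-generated input cannot alter the path.
    invoice_id = quote(str(arguments["invoice_id"]), safe="")
    return "GET", f"{API_BASE}/invoices/{invoice_id}"


print(to_api_request("get_invoice_status", {"invoice_id": "INV-1042"}))
```

If the wrapped endpoint is undocumented, inconsistent, or returns poor data, the tool inherits those problems unchanged.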

The reality is that with a brittle API foundation, MCP won’t deliver the outcomes you are looking for, in line with the “garbage in, garbage out” principle. A whole new landscape of chaos is arising, with more security challenges due to the foundational engagement logic behind it. API maturity and governance are more important than ever.

Success requires building comprehensive security architectures that extend beyond built-in protections, focusing on exposure limitations and multi-layered defense strategies.
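"Exposure limitations" and "multi-layered defense" can be sketched as checks a gateway applies in front of an MCP server: hide tools a consumer isn't allowlisted for, re-check the allowlist when a call arrives, and then require the matching OAuth scope. The consumers, tools, and scope strings below are hypothetical, and this is a simplified illustration under those assumptions, not a product configuration. Everything defaults to deny.

```python
# Hypothetical policy: which tools each consumer may see and call,
# and the OAuth scope each tool requires. Names are illustrative only.
TOOL_SCOPES = {
    "get_invoice_status": "invoices:read",
    "issue_refund": "payments:write",
}
ALLOWED_TOOLS = {
    "support-assistant": {"get_invoice_status"},
}


def visible_tools(consumer: str, server_tools: list[dict]) -> list[dict]:
    """Layer 1: hide every tool the consumer is not allowlisted for."""
    allowed = ALLOWED_TOOLS.get(consumer, set())
    return [tool for tool in server_tools if tool["name"] in allowed]


def authorize_call(consumer: str, tool_name: str, token_scopes: set[str]) -> None:
    """Layers 2 and 3: re-check the allowlist, then the token's scopes."""
    if tool_name not in ALLOWED_TOOLS.get(consumer, set()):
        raise PermissionError(f"{consumer} may not call {tool_name}")
    required = TOOL_SCOPES.get(tool_name)
    if required is None or required not in token_scopes:
        raise PermissionError(f"token lacks the scope required for {tool_name}")


server_tools = [{"name": "get_invoice_status"}, {"name": "issue_refund"}]
print(visible_tools("support-assistant", server_tools))
```

Re-checking at call time matters because hiding a tool from the listing does not stop an agent (or an injected prompt) from naming it directly.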

Non-deterministic integration brings new (governance) challenges

Unlike traditional digital systems that operate in highly predictable and repeatable patterns, AI agents represent a fundamental shift from deterministic to probabilistic integration patterns. This non-deterministic nature introduces significant challenges across the entire lifecycle.

Unpredictable outputs: Unlike conventional systems that produce predictable outputs, AI agents can generate different responses to identical inputs. Traditional testing, where you expect an exact match, becomes ineffective, creating unprecedented challenges in quality assurance and validation.
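One common alternative to exact-match testing is to assert properties that every acceptable answer must satisfy. The sketch assumes a hypothetical ticket-triage agent that returns JSON with `priority` and `summary` fields; the allowed priorities and the 280-character limit are illustrative assumptions.

```python
import json

ALLOWED_PRIORITIES = {"low", "medium", "high"}


def property_violations(raw_output: str) -> list[str]:
    """Check invariants an acceptable answer must satisfy, not its exact wording."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(data, dict):
        return ["output is not a JSON object"]
    problems = []
    if data.get("priority") not in ALLOWED_PRIORITIES:
        problems.append("priority missing or outside the allowed set")
    summary = data.get("summary")
    if not isinstance(summary, str) or not summary.strip():
        problems.append("summary missing or empty")
    elif len(summary) > 280:
        problems.append("summary longer than 280 characters")
    return problems


# Two differently worded answers both pass; an exact-match test would reject one.
a = '{"priority": "high", "summary": "Customer cannot log in after reset."}'
b = '{"priority": "high", "summary": "Login fails following a password reset."}'
print(property_violations(a), property_violations(b))
```

Property checks catch structural and policy failures, but judging whether a summary is actually correct still needs human review or a separate evaluation step.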

Lack of repeatability for testing: This lack of repeatability makes consistent testing and reproducing bugs extremely difficult. If a test fails once, you can’t be sure if it’s a real bug that needs to be fixed or a random variation in the model’s output.
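A pragmatic response to non-repeatable results is to run the same check several times and judge the pass rate against an agreed threshold instead of a single outcome. This is a minimal sketch; the 20 runs and 90% threshold are illustrative assumptions, not recommendations, and the stub stands in for a real agent call.

```python
from typing import Callable


def pass_rate(trial: Callable[[], bool], runs: int = 20) -> float:
    """Run a non-deterministic check several times and return the fraction that passed."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    passed = sum(1 for _ in range(runs) if trial())
    return passed / runs


def assert_reliable(trial: Callable[[], bool], runs: int = 20,
                    threshold: float = 0.9) -> float:
    """Fail only when the observed pass rate falls below the agreed threshold."""
    rate = pass_rate(trial, runs)
    if rate < threshold:
        raise AssertionError(f"pass rate {rate:.0%} is below {threshold:.0%}")
    return rate


# Deterministic stand-in for "call the agent and check its output":
# it fails on every fifth call, so 16 of 20 runs pass.
_calls = {"n": 0}


def stub_check() -> bool:
    _calls["n"] += 1
    return _calls["n"] % 5 != 0


print(pass_rate(stub_check, runs=20))  # 0.8
```

In practice `trial` would wrap a real agent call plus an output check (for example, `lambda: check(call_agent(prompt))`, where both helpers are hypothetical). A pass rate tells you how often something fails, not why, so failing runs still need to be logged for investigation.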

Opaque reasoning (the black box problem): AI’s complex and non-transparent decision-making exacerbates these difficulties. When agents chain multiple reasoning steps and interact with internal tools, the “black box” problem makes it hard to understand why an agent took a particular action or reached a certain conclusion.

Error handling & recovery: The dynamic and autonomous nature of agentic AI means it can make decisions and take actions that aren’t explicitly coded but are based on its goals and the context it is given. This opens these models and protocols to additional security threats, like prompt injection, which can manipulate the agent into performing unauthorized tasks.

Challenging security & oversight: AI’s non-deterministic nature also makes it harder to predict the exact impact on integrated systems when an AI model or its behavior changes, for example, after a third-party LLM updates its version. This complicates version control, regression testing, and reliable rollbacks if issues arise.

At this early stage, enterprises need to evaluate their organizational readiness to decide if the agentic AI route is the right solution today.

“Since LLMs (Large Language Models) are good at the understanding of text and language, it is important to know the limits of how they are using Chain-of-Thought reasoning and other applied techniques. LRMs (Large Reasoning Models) are where the real problem solving is: step-by-step thinking, impact, and cause and effect.” – Brian Otten, VP Digital Transformation

Security gaps: extending OAuth for agentic AI

AI’s delegated authority, or its nature of acting “on behalf of” its user, creates the biggest security challenge to the immediate implementation of multi-system AI protocols.

Acting on someone’s behalf is much more complicated and riskier in the dynamic world of AI than in traditional app-to-app communication. The OAuth 2.0 standard, while good for human access, isn’t enough for AI agents on its own.

Current OAuth tools are a good start, but they need significant upgrades for AI. It’s critical to verify not just the agent but also who granted its permissions and what was allowed. An AI agent can perform an unauthorized action accidentally, if it interprets a request differently than intended, or maliciously, if attackers have compromised it.
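Existing OAuth building blocks already let a resource server check more than the agent's identity. OAuth 2.0 Token Exchange (RFC 8693) defines an `act` (actor) claim naming the party acting on behalf of the token's subject. The sketch below inspects already-decoded token claims to answer "which user delegated, which agent is acting, and was this action allowed". It assumes signature verification happened earlier in a proper JWT library, and the audience, agent names, and scopes are hypothetical.

```python
import time
from typing import Optional


def check_delegated_token(claims: dict, *, expected_audience: str,
                          allowed_agents: set[str], required_scope: str,
                          now: Optional[float] = None) -> tuple[str, str]:
    """Validate decoded JWT claims for an agent acting on a user's behalf.

    Returns (user, agent). Signature verification is assumed to have been
    done beforehand; this only inspects claims and denies by default.
    """
    now = time.time() if now is None else now
    if claims.get("exp", 0) <= now:
        raise PermissionError("token expired")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if expected_audience not in audiences:
        raise PermissionError("token not issued for this resource server")
    user = claims.get("sub")                       # who granted the permission
    agent = (claims.get("act") or {}).get("sub")   # who is acting (RFC 8693 "act")
    if not user or agent not in allowed_agents:
        raise PermissionError("unknown user, or agent not approved for delegation")
    if required_scope not in claims.get("scope", "").split():
        raise PermissionError(f"missing scope {required_scope}")
    return user, agent


# Hypothetical decoded claims: Alice delegated invoice read access to a support agent.
example_claims = {
    "sub": "alice",
    "act": {"sub": "support-agent"},
    "aud": "https://api.example.internal",
    "scope": "invoices:read profile",
    "exp": time.time() + 300,
}
print(check_delegated_token(example_claims,
                            expected_audience="https://api.example.internal",
                            allowed_agents={"support-agent"},
                            required_scope="invoices:read"))  # ('alice', 'support-agent')
```

This covers who and what, but not whether the agent interpreted the user's intent correctly; that gap is why the article argues OAuth alone is insufficient.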

AI agents need very specific, dynamic access, and current OAuth permissions may be too general. Given that these protocols involve a chain of AI agents interacting with each other, proving who (or what) did what, and if it was authorized, is crucial for auditing. This requires better logging and cryptographic proof at each step.
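"Cryptographic proof at each step" can take many forms. One simple, tamper-evident option is a hash-chained audit log in which every record's MAC also covers the previous record's MAC. The sketch uses HMAC-SHA256 from the standard library with a single shared key, which is a simplifying assumption: real non-repudiation across agents would need per-agent asymmetric signatures and managed keys. Field names and agent names are hypothetical.

```python
import hashlib
import hmac
import json

AUDIT_KEY = b"replace-with-a-managed-secret"  # hypothetical; keep real keys in a KMS/HSM


def _mac(body: dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(AUDIT_KEY, payload, hashlib.sha256).hexdigest()


def append_entry(log: list[dict], actor: str, on_behalf_of: str, action: str) -> dict:
    """Append an audit record whose MAC also covers the previous record's MAC."""
    prev_mac = log[-1]["mac"] if log else ""
    record = {"actor": actor, "on_behalf_of": on_behalf_of,
              "action": action, "prev": prev_mac}
    record["mac"] = _mac(record)
    log.append(record)
    return record


def verify_chain(log: list[dict]) -> bool:
    """Recompute every MAC and link.

    Editing or reordering records, or removing one from the middle, breaks the
    chain; detecting a truncated tail would also require storing the latest MAC
    somewhere the log writer cannot modify.
    """
    prev_mac = ""
    for record in log:
        body = {k: v for k, v in record.items() if k != "mac"}
        if body.get("prev") != prev_mac:
            return False
        if not hmac.compare_digest(_mac(body), record["mac"]):
            return False
        prev_mac = record["mac"]
    return True


log: list[dict] = []
append_entry(log, "planner-agent", "alice", "delegate: invoice lookup")
append_entry(log, "billing-agent", "alice", "tools/call get_invoice_status")
print(verify_chain(log))                      # True
log[0]["action"] = "delegate: issue refund"   # tamper with history
print(verify_chain(log))                      # False
```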

OAuth 2.1 is currently a draft specification that improves the baseline security of how AI agents obtain tokens to interact with MCP servers.
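One concrete baseline improvement is that OAuth 2.1 builds PKCE (RFC 7636) into the authorization code flow, and the MCP authorization specification requires MCP clients to implement it. The minimal sketch below shows only the client-side generation of a `code_verifier` and its `S256` `code_challenge` using the Python standard library; the redirect, authorization request, and token request are omitted.

```python
import base64
import hashlib
import secrets


def s256_challenge(verifier: str) -> str:
    """BASE64URL(SHA256(verifier)) without padding, as RFC 7636 defines for S256."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def make_pkce_pair() -> tuple[str, str]:
    """Create a random code_verifier and its matching S256 code_challenge."""
    # 32 random bytes encode to 43 base64url characters, the minimum verifier length.
    verifier = base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")
    return verifier, s256_challenge(verifier)


verifier, challenge = make_pkce_pair()
print(challenge)
```

The client sends `code_challenge` with `code_challenge_method=S256` in the authorization request, then sends `code_verifier` in the token request; the authorization server recomputes the challenge and compares. This protects the code exchange itself, but says nothing about what the agent does with the token afterwards.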

However, AI’s interpretative nature, dynamic action selection, and complex delegation chains introduce security challenges that extend beyond what a pure authorization protocol like OAuth 2.1 can solve on its own. It requires a combination of robust OAuth implementation, careful architectural design (separating Authorization and Resource Servers), AI-specific security controls like prompt injection defenses, stringent data governance, and continuous monitoring.

How can enterprises start implementing agentic AI?

The “complete answer” for MCP security is an evolving suite of technologies, tools, and best practices.

Aside from support for the new protocols, our focus at Axway is on providing our customers the foundational tools: robust, federated API management for better governance, seamless integration capabilities to connect fragmented systems, and advanced data quality features. We help customers standardize their API landscape, build secure integration pathways, and ensure clean data for Agentic AI.

We are focused on transforming the new “spaghetti” layer into a structured, reliable backbone. We are building out support for Model Context Protocol (MCP) and will facilitate Agent-to-Agent (A2A) communication to create bridges for agents to collaborate and exchange information.

With Amplify Engage and Fusion, we are extremely well positioned to provide an experience that matches enterprise expectations and naturally extends Engage as the digital engagement hub for customers’ API ecosystems, both traditional and agentic.

Axway prioritizes customer system security above all else when developing transformative AI solutions that push technological boundaries. Amplify AI Gateway accelerates your AI journey, and we remain committed to comprehensive protocol support that meets evolving customer requirements.

Through collaboration with our early adopters, we keep in lockstep with rapidly developing market demands while protecting our customers’ interests. This customer-centric approach enables organizations to confidently embrace cutting-edge AI capabilities while maintaining the robust security postures that modern enterprises demand.

Explore how Axway integrates cutting-edge AI innovation with Amplify AI Gateway.